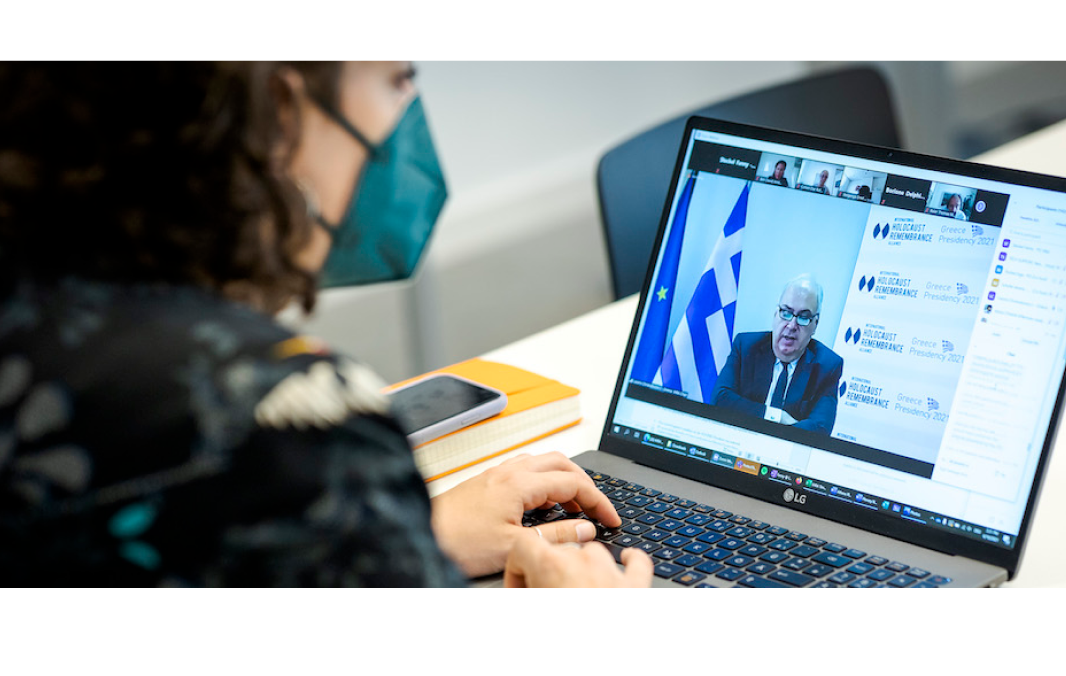

Using Artificial Intelligence: detecting antisemitic content and hate speech online

Making the global online community more inclusive and tolerant requires efficient, accurate ways to detect toxic speech patterns so that they can be countered. The international community has put considerable effort into developing technological models that detect hateful content online. The annotated Twitter dataset recently developed by the Institute for the Study of Contemporary Antisemitism (ISCA) is a valuable addition to these models. It uses the working definition of antisemitism adopted by the International Holocaust Remembrance Alliance (IHRA), which has been endorsed by more than 30 governments and international organizations, to help automated detection models better understand what antisemitic tweets can look like and, in turn, flag antisemitic content. The project uses the working definition to classify tweets, placing them on a wide-ranging scale that labels various degrees of antisemitic content.

The annotated Twitter dataset: automated detection of evolving antisemitic content

The annotation team used Indiana University’s Observatory on Social Media (OSoMe) database to find conversations surrounding “Jews,” “Israel,” and “antisemitism.” The team then used an annotation portal to classify tweets in their natural context and meaning. The portal also shows threads, images, videos, text, Twitter IDs, links, and other accompanying information so that annotators can holistically understand the message being conveyed.

The annotation portal processed a total of 6,495 tweets from January 2019 to December 2021, many of which used derogatory, antisemitic slurs. Using a detailed annotation guideline based on the IHRA’s working definition of antisemitism to assess the tweets, the project team found that 18 percent of the selected tweets could be labeled antisemitic, a sign of how prevalent biased content and conspiracy theories are online.
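To put the reported figures in concrete terms, the short sketch below converts the 18 percent share into an approximate tweet count and shows how such a share could be computed from a list of labels. It is an illustration only: the function name `labeled_share` and the label strings are hypothetical and are not taken from the ISCA dataset, and because the published percentage is rounded, the resulting count is an estimate.

```python
def labeled_share(labels, target="antisemitic"):
    """Return the fraction of labels equal to `target` (hypothetical label name)."""
    if not labels:
        raise ValueError("labels must not be empty")
    return sum(1 for label in labels if label == target) / len(labels)


# Figures reported in the article.
TOTAL_TWEETS = 6495
REPORTED_SHARE = 0.18  # rounded percentage, so the count below is approximate

approx_count = round(TOTAL_TWEETS * REPORTED_SHARE)
print(f"Roughly {approx_count} of {TOTAL_TWEETS} tweets were labeled antisemitic")

# Toy example with made-up labels, only to show how the share is derived.
toy_labels = ["antisemitic", "other", "other", "other"]
print(f"Share in toy sample: {labeled_share(toy_labels):.0%}")
```

Under these assumptions, the 18 percent figure corresponds to roughly 1,169 tweets.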

The development of this Twitter dataset is part of a larger movement to improve automated detection of hate speech online and to train AI models on annotated data. The annotation team working on the dataset has made the Twitter IDs, tweets, and their labels available, initiating a global conversation on recognizing changing forms of antisemitic content online. Later this year, the team will add a label for tweets that call out antisemitism, which will equip online communities with a practical tool to counter antisemitic speech.

The annotation process encourages annotators to consistently apply the IHRA’s widely used working definition of antisemitism, which is meant to serve as a practical tool for understanding and monitoring changing forms of antisemitic content.

The IHRA’s working definition of antisemitism: a practical tool to identify various antisemitic attitudes

The IHRA working definition of antisemitism was developed by an interdisciplinary group of experts and IHRA Member Countries as a practical tool to identify complex forms of antisemitism. As countering antisemitism is central to the IHRA’s mandate, the definition encourages collective solutions to the global spread of antisemitic messages. The working definition is therefore a significant resource for projects improving automated identification of antisemitic content endangering democratic freedoms on a global scale. Antisemitic content changes over time, and the definition advances an approach that holistically assesses hateful content to counter new forms of hate speech.

The flexible nature of the IHRA’s working definition of antisemitism furthers the accuracy of the Twitter dataset, allowing annotators to explore varying degrees of negative or positive sentiment expressed in the tweets. The process also allows annotators to agree or disagree with the working definition on a case-by-case basis, which encourages its accurate application. This helped the team consider a range of negative or positive sentiments evoked by tweets even when there was no clear visual evidence for or against an antisemitic attitude. This especially applies to tweets that use sarcastic or evasive language or that include distortive Holocaust comparisons to contemporary issues.

Automatic detection of hate speech is indispensable to mitigating it in the future. However, these models are not perfect and must be part of a larger framework of human review and oversight. It is also important to ensure that these models are trained on diverse, representative data and that their results are interpretable and transparent. This is why the annotation teams within the ISCA project met on a weekly basis to discuss tweets, both to better understand the varying contexts of online conversations and to increase annotation reliability.
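Annotation reliability is commonly quantified with an inter-annotator agreement statistic. The article does not say which measure, if any, the ISCA teams used, so the sketch below is only an illustration of one widely used option, Cohen's kappa, which corrects the raw agreement between two annotators for the agreement expected by chance. The function name `cohens_kappa` and the sample labels are made up for this example.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)

    # Observed agreement: fraction of items on which both annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: for each label, the product of the two annotators'
    # marginal frequencies, summed over all labels.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    if expected == 1.0:
        # Both annotators used a single identical label; agreement is trivial.
        return 1.0
    return (observed - expected) / (1 - expected)


# Made-up labels for ten tweets (1 = antisemitic, 0 = not antisemitic).
annotator_a = [1, 1, 0, 0, 1, 0, 0, 0, 1, 0]
annotator_b = [1, 0, 0, 0, 1, 0, 0, 1, 1, 0]
kappa = cohens_kappa(annotator_a, annotator_b)
print(f"Cohen's kappa: {kappa:.2f}")
```

In this toy sample the annotators agree on 8 of 10 tweets, but because some agreement would occur by chance, kappa comes out lower, at about 0.58. Values closer to 1 indicate more reliable annotation, and regular discussion of difficult cases is one way teams try to raise it.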

The future of automated detection of antisemitic speech: countering Holocaust distortion online

Holocaust distortion has increased since the COVID-19 pandemic. Thus, the Twitter dataset also contains a Holocaust distortion label to identify and counter it.

As the global online community grows, so does the need to properly identify hate speech and antisemitic and distortive content online. Given how these messages and attitudes assume various forms in a constantly changing world, flexible and practical tools like the IHRA working definitions and the Twitter dataset are invaluable to creating a more inclusive global community.
